Let's blame the developer who pressed deploy

Moving Past the Blame: Mastering the Root Cause Analysis

The title of this post was one of the reactions to the infamous CrowdStrike incident this summer. Airlines grounded planes. Emergency service numbers were down. TV stations could not broadcast. It was the largest global IT outage ever.

A common reaction to the incident has been “Who is to blame?".

I kept wondering if this was the most important question to ask. Unless you work for an insurance company, there are more pressing questions at hand.

Could the incident happen again—like tomorrow?

How could this happen in the first place?

Having worked in IT for over a decade, I have been part of “all hands on deck” incidents and their fallout. This blog post will focus on conducting an efficient root cause analysis. I will share insights from personal experience on how to ask the right questions. The goal is to fix the cause rather than treat the symptom.

Spoiler: A root cause analysis does not blame an individual action or person.

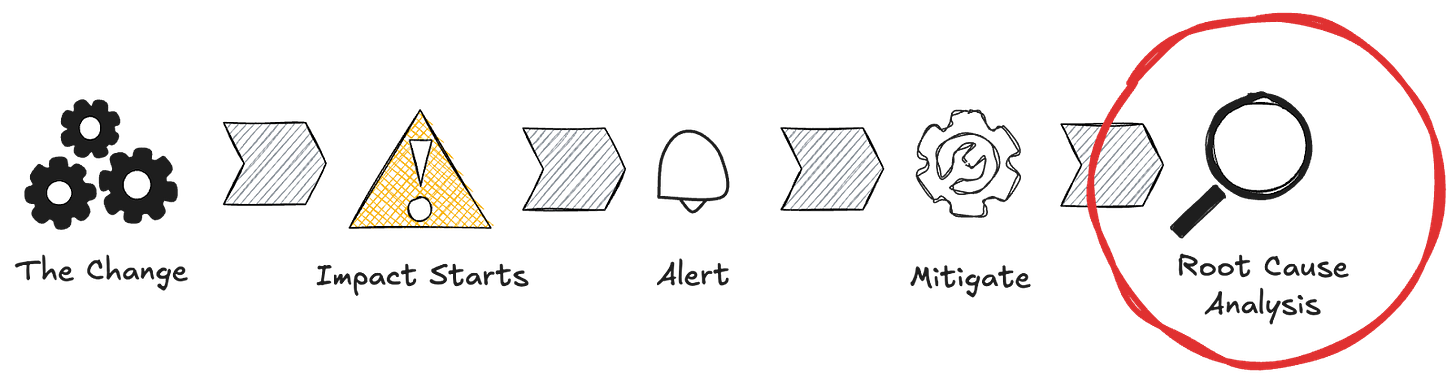

A Systematic Approach

We need a systematic approach to understand what exactly happened. I will explain how this process works so we are all on the same page. If you already know the post-mortem process, feel free to skip this section.

The first time I stumbled upon a post-mortem was back in 2017 when I could not push my changes to Git. GitLab had a full-service outage. The reputational loss for GitLab was huge. At the same time, GitLab did something remarkable. They reestablished trust by being transparent about the causes. They also detailed how they would change to prevent a similar issue in the future.

The first step of the process is to assign single-threaded ownership for a post-mortem case. Many companies assign this role to the manager of the team that released the defect. I will refer to this role as the post-mortem owner. Imagine walking in those shoes. As the owner, you would manage the process end to end. You would also track progress to ensure each step is done in due time.

The gist of the process is a meeting. In the post-mortem meeting, stakeholders identify the root cause and agree on corrective actions. Before the meeting, you should prepare a draft document that contains

Timeline: List all relevant events with timestamps. It is important to set expectations. Finger-pointing or naming individuals is not acceptable. Instead, use generic statements. For example: "The engineer on team X made change Y to mitigate customer impact." The timeline starts with the change that introduced the defect. It ends with the assignment of the post-mortem owner.

Impact: Describe the technical and business impact for the customer. What are the actions the customer was not able to take? How did it impact their business? Be specific. For example: "80% of requests failed for the API endpoint X in the period Y.". Add graphs that visualize customer impact, like P95 latency or error rates.

Resolution: Describe how the impact was mitigated. Has someone rolled back the change? Was there a manual workaround, like a server restart or database scale-up?

Share this document up front with all meeting participants. Let's be honest, though—only a few will actually read it. Hence, it's a good idea to allow time to read and ask questions at the start of the meeting. Everyone should have a deep understanding of what happened, when, and why.

Only afterwards you collaborate together on the remaining sections of the document:

Root Cause Analysis: The practice of asking 'why' repeatedly in the case of a problem is called "Five Whys.". Start with the symptoms. Then, create a causal chain to find the deeper root cause. The number five is arbitrary. There can be longer causal chains, but rarely shorter ones that are effective.

Identified root cause: State all identified root causes. List both the conditions and actions that caused the software to fail. Root causes are process or organizational issues.

Actions: Discuss and list identified corrective and preventive actions. We must assume that, despite good intentions, people will continue to make mistakes. So we need to build fault tolerance into our processes. Each post-mortem action has a clear owner assigned.

Insights

The theory is rather straightforward. Yet, in practice, there are so many subtle ways in which things can go south. Im sharing insights based on my personal experience in no particular order:

Rock Solid Event Timeline: As the post-mortem owner, you must know the timeline in detail. Yes, it can get complex and technical. If you have open questions, reach out to the engineers who worked on the incident mitigation. Make sure all key stakeholders understand it. I can't stress this enough. Without a shared understanding, stakeholders will have long, inconclusive debates. Take the time to clarify open questions for yourself and for other stakeholders. Resist the urge to jump to the root cause analysis right away.

Root Cause Analysis

Right Audience: Invite key stakeholders to the post-mortem meeting. Include engineers who caused and mitigated the incident. Additionally, invite subject matter experts in the affected domain and technology. Having a strong network inside the company will help. This way you might already know the right subject matter experts. More than that, if colleagues know and trust you, they are open to help. Be careful though! You are setting yourself up for a meeting marathon if you're inviting too many people. Check up front with potential stakeholders if you're not sure whom to invite. Share what you need from them and ask how they can contribute.

Keep your bias at bay: As the process owner, you will already have made your opinion on the cause. As will most other people joining the meeting. Learn something new and question your perspective. Do not provide any upfront proposal for the root cause in the provided draft document. Also, try not to defend your team or domain, but stay open to change. As the post-mortem owner, I've already run the 5 Whys in my head a dozen times before such a meeting. Yet, often the outcome was a different root cause than anticipated.

Synchronous: Organize the root cause analysis as a brainstorming meeting with an open outcome. Discover the causal chain together with key stakeholders during the meeting. Start with the symptoms and work your way backward. Avoid asynchronous, ever-prolonging ping-pong rounds. This way, you challenge and discuss every step on the spot without delay. A causal chain that key stakeholders agree on makes it harder to challenge the root cause later on. If someone disagrees with the cause for political reasons, refer them to the causal chain.

Bottom-Up Actions: Make decisions close to the information. If possible avoid top-down mandates, introducing managerial approvals or manual checks "just to be safe.". These create process bottlenecks. Instead, make the hard effort to come up with a process fix or provide automation to avoid human error.

Measure the Process: Improve the process without guessing. Define SLAs for how long the entire post-mortem process should take. Measure the cycle time to identify and remove bottlenecks.

As abstract as possible, as technical as needed: I stressed the importance of having a shared understanding of the timeline. This is only possible if you make your point clear to non-techies as well. You can expect your manager to be somewhat technical. Make sure managers understand the document and can support you with the actions.

The Outcome

CrowdStrike has shared the public root cause analysis of the incident. Here is a summary of the identified corrective actions and contributing conditions:

Actions:

Gaps in Testing Strategy: They talk in detail about how they add checks to verify input parameters at compile and runtime for "Rapid Response Content.". Furthermore, they elaborate on how they expand integration tests.

Staged Deployment: Changes that can cause system crashes must not be rolled out to all customers at once. A gradual rollout helps to limit the blast radius to a few customers in case of failure.

Conditions:

Kernel Space: Windows requires advanced security products to run in kernel space instead of user space. In case of an issue, it is not only the security product that crashes, but the entire operating system. CrowdStrike will collaborate with Microsoft to enable more security features in user space.

After reading CrowdStrike's root cause analysis, another action comes to mind: treat every change as a code change. After all, it was a configuration change (channel file) that triggered the incident.

TL;DR

In this blog post, I shared a systematic approach to fix the cause rather than treat the symptom of an incident. We discussed the "5 Whys" method to ask the right questions and lead us to the root cause. Remember to consider both actions and conditions that contributed to the software failure. As we saw in the CrowdStrike incident, multiple root causes are common. Finally, corrective actions are identified to prevent the issue from happening again without any blame.